I enjoyed Luis von Ahn’s Google tech talk on human computation. Human computation is not something new; as always, others have worked on it before. From Wikipedia:

In computer science, human-based computation is a technique when a computational process performs its function via outsourcing certain steps to humans. This approach leverages differences in abilities and alternative costs between humans and computer agents to achieve symbiotic human-computer interaction. In traditional computation, a human employs a computer to solve a problem: a human provides a formalized problem description to a computer, and receives a solution to interpret. In human-based computation, the roles are often reversed: the computer asks a person or a large number of people to solve a problem, then collects, interprets, and integrates their solutions.

In the talk, Prof. von Ahn presented a couple of interesting statistics. First, humans spend a horrible amount of time playing solitaire, as useless a game as that is (btw, I am not part of this statistic): in 2003, about 9 billion human-hours were spent on it. By contrast, only 7 million human-hours were spent building the Empire State Building, roughly 1,300 times fewer. Von Ahn pointed out that if we could create games that, besides being entertaining, also served a purpose, we would be much better off.

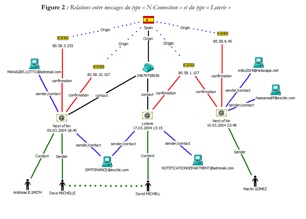

Another premise for his work is that many computational problems remain unsolved, for instance spam and how to prevent illicit use of network resources. Spammers use bots to register thousands of fake email addresses from which to send spam. Distinguishing a bot from a real human is an example of an unsolved computational problem. To get around this, it is now standard to use a CAPTCHA: we take a string of random letters and render it in an image, in a process that is impossible to reverse … for a machine. Humans, on the other hand, are very good at pattern recognition, so we use this trick to tell a human from a machine.

The point of the talk was: what if we used this human ability to solve other problems that computers are not good at, and that can be far more useful than preventing spam? For instance, one of the unsolved problems is how to index an image. At the moment we use the keywords contained in the image’s filename. This is really dumb, but it sometimes works. But what if we put images in a game context, asked players to tell us what is in each image, and used their descriptions to index it? That was the point of the ESP Game that Prof. von Ahn et al. implemented. In the game, two players randomly matched on the internet are asked to look at an image independently and give some keywords for it. As soon as they agree on a keyword, that keyword is taken as good and the players are given some points.
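The agreement rule described above can be sketched in a few lines of Python. This is only my own toy illustration, not the actual implementation by von Ahn et al.: the names `play_round` and `label_image`, the score of 100 points, and the lowercase normalization are all assumptions made for the example.

```python
from collections import defaultdict
from typing import Dict, Iterable, Optional, Set, Tuple

POINTS_PER_MATCH = 100  # hypothetical score; the talk summary gives no value


def normalize(word: str) -> str:
    """Treat 'Sand' and ' sand ' as the same keyword (an assumption)."""
    return word.strip().lower()


def play_round(guesses: Iterable[Tuple[str, str]]) -> Optional[str]:
    """Return the first keyword that both players have typed, or None.

    `guesses` is a stream of (player_id, keyword) pairs in the order
    they were typed. Players never see each other's guesses.
    """
    seen: Dict[str, Set[str]] = {}  # player_id -> keywords typed so far
    for player, word in guesses:
        key = normalize(word)
        if not key:
            continue  # ignore empty input
        seen.setdefault(player, set()).add(key)
        # Agreement: the other player has already typed this keyword.
        if any(key in words for other, words in seen.items() if other != player):
            return key
    return None


def label_image(index: Dict[str, Set[str]], image_id: str,
                guesses: Iterable[Tuple[str, str]]) -> int:
    """Add the agreed keyword to the search index and return the points won."""
    agreed = play_round(guesses)
    if agreed is None:
        return 0
    index[agreed].add(image_id)
    return POINTS_PER_MATCH


if __name__ == "__main__":
    index: Dict[str, Set[str]] = defaultdict(set)
    round_guesses = [("a", "sea"), ("b", "Sand"), ("a", "sand")]
    print(label_image(index, "beach.jpg", round_guesses), dict(index))
```

The point of requiring agreement is that neither player can see what the other types, so a keyword that both of them come up with independently is probably a fair description of the image.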

I won’t go into details here, as the video is explicit enough about the mechanics of that and other marvelous games. The talk also reports incredible results from the running experiment: 75,000 players have provided 15 million labels for images. Here I want to argue a different point. As Tim O’Reilly argued in his last column for Make, every game has a purpose, not only in the way suggested by von Ahn. Mr. O’Reilly says:

I liked von Ahn’s phrase, “games with a purpose,” but of course all games have a purpose, not merely those that put us to work helping out our computers. Play is so central to human experience that historian Johan Huizinga suggested that our species be called Homo ludens, man the player. … What makers understand is that play is as important as work. It’s not just how we pass the time, it’s how we learn and explore. … We play to learn. What we make when we play is ourself.

I want to extend von Ahn’s phrase by suggesting that every game should have a double purpose: we can help the machine while we learn and make things. Playing solitaire is a waste, but it’s relaxing and maybe fun. It is definitely not useful to anyone other than the player. The best would be to make games that are fun, useful for society because we accomplish something while we play, and useful for ourselves because we learn something while we play.

I wish I could see examples of this last category. Anybody?

REFERENCES:

[1] Luis von Ahn, Shiry Ginosar, Mihir Kedia and Manuel Blum. Improving Image Search with Phetch. To appear in IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP) 2007.

[2] Luis von Ahn. Games With A Purpose. In IEEE Computer Magazine, June 2006. Pages 96-98.

[3] Luis von Ahn, Ruoran Liu and Manuel Blum. Peekaboom: A Game for Locating Objects in Images. In ACM Conference on Human Factors in Computing Systems, CHI 2006. Pages 55-64.

Tags: education, human computer interaction, learning technology, machine learning, pedagogy, user experience


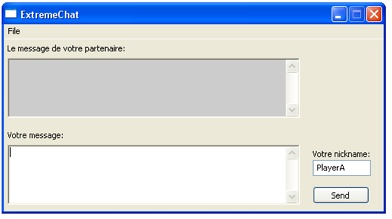

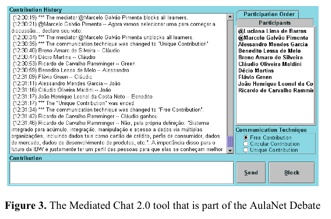
