L. Wenyin, S. Dumais, Y. Sun, H. Zhang, M. Czerwinski, and B. Field. Semi-automatic image annotation. In INTERACT2001, 8th IFIP TC.13 Conference on Human-Computer Interaction, pages 326–333. Press, 2001. [PDF]

——

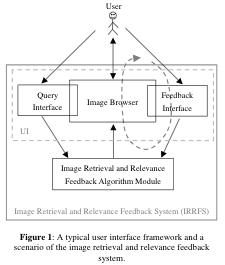

This article describes a technique for incorporating users’ feedback as annotations in an image retrieval system. The authors' basic argument is that manually annotating images is tedious for the user, and that even the direct annotation techniques proposed by Shneiderman do not solve this issue. On the other hand, fully automatic annotation of images is not yet reliable enough.

Therefore, they propose a ‘middle-ground’ approach to the problem: users of an information retrieval engine are asked to rate the results returned by the system. When a result is marked as positive, the system adds the query terms as descriptors of the selected image.
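The feedback loop described above can be sketched in a few lines of Python. This is only an illustration under simple assumptions, not the authors' implementation: the function name `apply_feedback`, the image identifiers, and the choice to split the query on whitespace are all hypothetical, and annotations are stored as plain keyword sets.

```python
from collections import defaultdict


def apply_feedback(annotations, query, positive_ids):
    """Attach the query terms to every image the user marked as relevant.

    annotations: dict mapping image id -> set of keywords (assumed structure)
    query: the text query the user typed
    positive_ids: ids of the results the user rated positively
    """
    # Assumption: a query is a whitespace-separated list of keywords.
    terms = {term.lower() for term in query.split()}
    for image_id in positive_ids:
        annotations[image_id] |= terms
    return annotations


# Hypothetical usage: two of the returned images are marked as relevant.
annotations = defaultdict(set)
apply_feedback(annotations, "sunset beach", ["img_03", "img_07"])
apply_feedback(annotations, "Beach holiday", ["img_07"])
```

After these two rounds, `img_07` carries the keywords from both queries, while images that were never marked stay unannotated.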

To evaluate this architecture, the authors used two metrics: retrieval accuracy and annotation coverage. Annotation coverage is the percentage of annotated images in the database. Retrieval accuracy is how often the labeled items are correct. In their experiment, retrieval accuracy is the same as annotation coverage, because positive examples are automatically annotated with the query keywords.
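To make the two metrics concrete, here is a small Python sketch based on the definitions given in this summary. The function names, the data layout, and the `truth` dictionary of correct keywords are assumptions made for illustration; the paper may compute these quantities differently.

```python
def annotation_coverage(annotations, all_image_ids):
    """Fraction of images in the database that have at least one keyword."""
    if not all_image_ids:
        return 0.0
    annotated = sum(1 for image_id in all_image_ids if annotations.get(image_id))
    return annotated / len(all_image_ids)


def labeling_accuracy(annotations, truth):
    """Fraction of (image, keyword) labels that match a reference labeling.

    truth: dict mapping image id -> set of correct keywords (hypothetical).
    """
    total = 0
    correct = 0
    for image_id, keywords in annotations.items():
        for keyword in keywords:
            total += 1
            if keyword in truth.get(image_id, set()):
                correct += 1
    return correct / total if total else 0.0


# Hypothetical toy database of four images, two of them annotated.
annotations = {"a": {"cat"}, "b": {"dog", "park"}, "c": set()}
all_ids = ["a", "b", "c", "d"]
truth = {"a": {"cat"}, "b": {"dog"}}

coverage = annotation_coverage(annotations, all_ids)
accuracy = labeling_accuracy(annotations, truth)
```

In this toy example two of the four images are annotated, and two of the three assigned keywords agree with the reference labels.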

The authors found that the annotation strategy is more efficient when some initial manual annotations are available. They also ran a usability test of the system (called MiAlbum) and found that it was difficult to get people to discover and use relevance feedback. To improve the discoverability of feedback, the authors argue that we need to improve participants’ understanding of the matching process.